DeepSeek - much ado about nothing or a Sputnik moment for AI? I’ve always believed that markets are the best expression of investor sentiment - look not at what they say, look at how they trade. Gaze at my portfolio and gaze into my soul.

Below is a quick summary of our perspective at Crucible after a weekend spent reading, discussing, and analyzing with our portfolio companies and partners. Since so many folks reached out to chat, we wanted to write a quick summary in the hopes it’s helpful and provides additional context as you form your own conclusions. We’d love to hear your thoughts.

What Happened

Last week, DeepSeek published an open source AI model they claim was trained with $5M-ish of compute as a side project by a bunch of Chinese quants diddling around with excess GPUs owned by the high frequency trading firm they work for. They’ve been writing great research for a year or so, so this didn’t come out of nowhere. The v3 and r1 models were released on Wednesday but the app hit Friday. The $5M number is marginal compute cost - it does not include capex, opex, etc. Notably, DeepSeek can be used for free and is faster than OpenAI’s latest o1 release, leveraging innovative techniques to make workloads more efficient and enable faster processing.

If a small-ish team with old Nvidia GPUs built an open-source AI model that rivals OpenAI’s while using less money, chips, and energy to do it, what are the implications for the data center economy and the AI tech landscape?

While there is much hand wringing over how we got here, we think it’s more productive to focus on where we go next. Here is what we think *is* interesting (hint: it’s not the culture war stuff).

Breakthrough in Model Architecture: DeepSeek uses a few innovative techniques that change model architecture to boost performance and speed while using far less compute than frontier labs. It’s a very impressive display of research and engineering under a significant resource constraint. We can only imagine what’s going on at all of the frontier labs in the US and Europe right now as they work to integrate these breakthroughs into their own models. This blog post from Himanshu Dubey, Deepseek-v3 101, is a good technical primer; we’re not going to pretend we’re ML engineers here.

Understanding Leverage in the AI Value Chain: So far leverage has been on closed source models / IP and compute power / capex. Now leverage is shifting, and the question is where in the value chain that leverage is most durable and defensible. Open source models and free query access may force a business model change for other players. Interface loyalty might actually matter - the moat isn’t architecture, it’s delivering a product to a long-term paying user that materializes revenue. Distribution is the most valuable asset to own in the attention economy. DeepSeek is getting a ton of attention right now (#1 in App Store) but can that persist?

Implications for Capex Payers and Capex Receivers: There is worry about the level of Capex in US AI spending, especially after last week’s Stargate announcement - $100B planned, $500B total estimated - to make the US the leader in AI compute. Facebook told the market it plans to spend $65B in capex on AI in 2025. The CEO of Microsoft tweeting about the Jevons Paradox late Saturday night and Nvidia down 17% in one day (as of noon ET, Mon Jan 27) are pretty telling. Is the AI bubble going to pop? Is this an extinction level event for US venture firms dumping money into frontier labs and data center private equity throwing money at compute? We don’t think so.

The Impact on our World: Energy, Compute, Crypto

Last week, we shared a full deck on our investment approach which we lovingly call “Energy, Compute, Crypto.” We’ll rip a longer piece on that soon.

Energy

Energy is and continues to be the limiting factor for the AI industry. Efficiency drives demand, and demand drives energy use. Energy will remain the foundational enabler of this growth. As with any iteration of compute systems, a model like r1 brings with it a cascade of energy-intensive impacts:

Data Center Expansion: More facilities will be required, and existing ones will need upgrades to handle the greater demand for power and cooling.

Energy-Compute Optimization: Demand-response programs, renewable energy integration, transmission and on-site generation will need to keep pace with this scaling infrastructure.

Emerging Energy Challenges: Innovations in compute often move faster than energy grids can adapt, creating a growing need for decentralized energy solutions (e.g., microgrids, flared-gas solutions like Crusoe Energy, nuclear SMRs). We will need to continue to be more creative.

That said, some researchers believe AI power demand projections were already too high prior to the release of DeepSeek. The estimates were more reflective of utilities’ desires to drastically pump up investment in grid infrastructure than what is needed in reality. Still, even if DeepSeek can drive down AI electricity consumption, the industry will continue to need marginal power. The AI arms race will only spur greater power demand.

Compute

Compute is the means of production of the future. All the hot takes stating “GPU spend is irrelevant now” are missing the bigger picture, for three key reasons: 1) commoditization of compute is something that we knew was (is) already happening, 2) the dollars of capital required were already overstated, and 3) efficiency will promote new adoption / new users.

Commoditization Is a Feature, Not a Bug

R1 is focused on cost-efficiency and open-source collaboration. The Jevons Paradox states that, in the long term, an increase in efficiency in resource use will generate an increase in resource consumption rather than a decrease. We have always believed that compute will be commoditized. As AI gets more efficient and accessible, we will see adoption skyrocket across more industries and use cases, turning it into a commodity that resembles the likes of oil. The commoditization of compute doesn’t signify a saturation point; it signifies a growth inflection point. Just as the industrial revolution was fueled by abundant, affordable energy, the next wave of innovation will be powered by abundant, affordable compute.
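To make the Jevons Paradox argument concrete, here is a minimal sketch under a loudly stated assumption: demand for AI workloads follows a constant-elasticity curve. That demand model, the function name `resource_use`, and every number below are hypothetical illustrations, not figures from DeepSeek or anyone else. The point it shows is that a 10x efficiency gain only raises total compute consumption if demand is elastic enough (elasticity above 1).

```python
def resource_use(efficiency: float, elasticity: float,
                 price: float = 1.0, scale: float = 1.0) -> float:
    """Total resource (e.g. GPU-hours) consumed under an ASSUMED
    constant-elasticity demand curve. Illustrative only."""
    # Cost of one unit of useful work falls as efficiency rises.
    cost_per_task = price / efficiency
    # Assumed demand curve: tasks demanded = scale * cost^(-elasticity).
    tasks_demanded = scale * cost_per_task ** (-elasticity)
    # Each task needs 1/efficiency units of the underlying resource.
    return tasks_demanded / efficiency


baseline = resource_use(efficiency=1.0, elasticity=1.5)

# Hypothetical 10x efficiency gain with elastic demand (elasticity > 1):
elastic = resource_use(efficiency=10.0, elasticity=1.5)

# Same gain with inelastic demand (elasticity < 1):
inelastic = resource_use(efficiency=10.0, elasticity=0.5)

print(f"baseline resource use:   {baseline:.3f}")
print(f"10x efficiency, elastic:   {elastic:.3f}")
print(f"10x efficiency, inelastic: {inelastic:.3f}")
```

Under these assumptions, total resource use scales as efficiency raised to the power (elasticity - 1), so the whole bull case for compute rests on demand being elastic. That is the bet this section is making.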

Amount of Capital Needed for Some of the Largest Projects Already Overstated

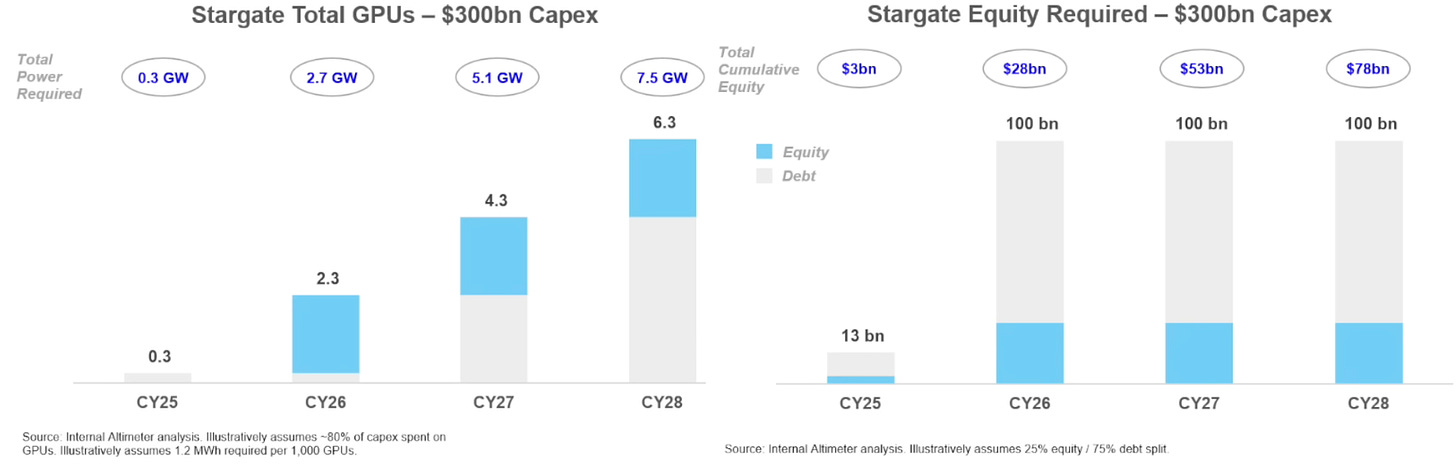

$100B needed for Stargate? Not so fast … the numbers were already off for the largest project ever announced. Accounting for efficiency, no one needed to show up with $100B off the bat. See the back-of-the-envelope math above (sourced from Bill Gurley’s team). Efficiency is a part of all evolution - and AI is no different. For the first year to operate 250k GPUs, they estimate that only $13B of capital will be needed in reality, with a mix of ~25% equity and ~75% debt.
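The figures quoted here can be sanity-checked with quick arithmetic. The sketch below uses only the numbers in this post ($13B first-year capital, 250k GPUs, ~25% equity / ~75% debt, $100B headline); the helper name `split_capital` is ours, and the per-GPU figure is just the implied all-in capital ratio, not a chip price.

```python
def split_capital(total_usd_b: float, equity_share: float) -> dict:
    """Split a capital requirement (in $B) into equity and debt."""
    if not 0.0 <= equity_share <= 1.0:
        raise ValueError("equity_share must be between 0 and 1")
    equity = total_usd_b * equity_share
    return {"equity_usd_b": equity, "debt_usd_b": total_usd_b - equity}


FIRST_YEAR_CAPITAL_USD_B = 13.0   # estimate quoted in the post
HEADLINE_USD_B = 100.0            # planned Stargate figure quoted in the post
GPU_COUNT = 250_000               # GPUs operated in year one, per the post

# ~25% equity / ~75% debt, per the post (treated as exact here).
first_year = split_capital(FIRST_YEAR_CAPITAL_USD_B, equity_share=0.25)

# How much of the headline number is actually needed up front.
share_of_headline = FIRST_YEAR_CAPITAL_USD_B / HEADLINE_USD_B

# Implied all-in capital per GPU (includes everything, not just the chip).
capital_per_gpu_usd = FIRST_YEAR_CAPITAL_USD_B * 1e9 / GPU_COUNT

print(first_year)
print(f"share of headline: {share_of_headline:.0%}")
print(f"implied capital per GPU: ${capital_per_gpu_usd:,.0f}")
```

On these inputs the equity check is a small fraction of the headline figure, which is the point: the scary number and the cash actually required on day one are very different things.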

Non-Obvious Industries and Use Cases will now Emerge

From our vantage point, R1 and similar initiatives are catalysts for growth in the compute economy. As AI-powered solutions become affordable and accessible, demand for compute resources will proliferate, especially in energy, where new applications are focused on grid optimization, predictive maintenance, and energy forecasting. Each new application or vertical that adopts AI-driven tools will add to the compute demand, reinforcing the need for a robust, scalable infrastructure.

For Crucible, this trend only further proves our point: companies will continue to build and optimize data centers to meet growing compute demand. As AI models become more efficient, so will data centers, the grid, and all of the other industrials required to power our digital world. Efficiency means new business models and next gen software, and that means business for us.

Crypto

Crypto is having a come to market moment. We’ve never had more bullish catalysts driving sentiment, but the flows tell a different story. The vibes are off and the froth is settling with AI vaporware coins like ai16z down 70% from its highs. Every coin is getting an ETF. Every company is trying to IPO. Every regulation is being re-evaluated. But the market isn’t reacting.

While many on crypto twitter hailed the launch of Trump’s memecoin, $TRUMP, as validation of crypto’s staying power, the whole project felt like a night in Atlantic City - an exercise in highly efficient value extraction. The launch of a Melania memecoin 48 hours later was more of the same. Gambling has always been the frontier market for technology innovation, and memecoins feel like gambling on steroids. If you have edge, like many insiders who made a lot of money on $TRUMP did, it’s a great way to make a lot of money really fast. Otherwise, you’re the fish.

Crypto feels confused right now, much like macro. Uncertainty is a hard thing to trade, and week one of the Trump administration has been a wild ride. Bitcoin has traded like a “risk on” asset for most of its existence and when things get weird, markets tend to go “risk off.” The financialization of Bitcoin and the broader integration of crypto into capital markets makes crypto more susceptible to macro moves. But mostly, there are more tokens than ever, attention is more fragmented than ever, and it’s becoming clearer that we are, in fact, not all going to make it.

The Crypto x AI narrative has been cooking for a while, and so far there are dozens of different directions being explored across all domains, including models, inference, data, verification, agentic payments, and more.

How does crypto make AI better or safer?

As far as databases go, blockspace is expensive and slow. It’s not clear if putting AI models, inference, and data onchain is really the right path forward with the current iteration of blockchains. We are looking to back ambitious L1s that are continuing to push speed and performance to new levels by using dedicated hardware. Compute is probably the easiest vector to think about - blockchains facilitate aggregation and DePIN networks have demonstrated the ability for a token to be used as an effective acquisition tool to aggregate virtualized resources. There is a wide range of compute aggregators and marketplaces that have emerged, although resource availability and utilization are still minuscule (but growing). Proof of personhood - like Sam Altman’s project Worldcoin - and other types of verification are also growing use cases.

In our view, DeepSeek validates the vision that open-source protocols and public networks are going to play a critical role in how we aggregate, optimize, and financialize hardware (capex) and software (opex). Innovative protocols like DoubleZero, which uses existing installed dark fiber to build a dedicated hardware layer for crypto, feel like a novel and productive innovation that can actually push us from talking about TPS (transactions per second) to delivering it on an unprecedented scale that could reasonably support the latency needed to build performant decentralized AI infrastructure. Networks like Exo are building localized resource topologies from everyday devices like phones and computers and allow users to run models on these aggregated clusters.

Just as DeepSeek will likely push a step function change in building better AI models, it’s high time that crypto undergoes its own step function change in building better infrastructure capable of supporting compute, storage, and connectivity at the scale needed for AI.

How does AI make crypto better or safer?

This is where we see onchain agents deploying automated trading strategies or maximizing yields. Smart contracts and public-private key cryptography aren’t intuitive for most humans, but they are programmatic and therefore the language of computers. Agents could potentially play a role in removing some of the technical and UX complexity or monitoring for threats and vulnerabilities, although there are still concerns about how to do this safely, especially if AGI is indeed a relevant concern.

This isn’t necessarily better or safer, but richer is always a use for blockchains, and Truth Terminal, a Twitter account linked to ChatGPT, generated a lot of attention and then turned that attention into money by launching a token - $GOAT. The token reached $50B in FDV, which arguably makes it the most successful AI token, and it’s based purely on speculation around the potential of agents issuing tokens onchain - casino, baby!

What it Means for Crucible

For Crucible - nothing changes. We are a venture fund, not a trading firm, so slow and steady wins the race. We don’t invest in semiconductors. We don’t invest in data centers. We invest in protocols, networks, and companies that enable the aggregation, optimization, and financialization of the data center economy. We started deploying capital in September, and so far:

[REDACTED FOR NON-LPS]

Equities

Lastly, public markets are always a useful proxy for the evolution of investment themes. DeepSeek was out all last week, but it seems like the mainstream narrative didn’t pick it up until the weekend, and on Monday the market ended down 3% and Nvidia ended down 17%, the largest single-day loss of market value for any US equity. Markets have been overextended and overheated for some time, as evidenced by Oracle (lol) rallying 11% on Stargate, Oklo rallying 40% in one day on Stargate even though they don’t have a single SMR on the ground yet, and many more such cases (no shade at Oklo, animal spirits etc etc).

We expect turbulence ahead in Nvidia, Mag7, and other Capex receivers in the compute value chain. We don’t think the AI bubble pops anytime soon, but rather that we see pricing for all things AI being completely re-written. As in any value chain, investors are beginning to realize there will be only a few places where there’s price leverage. The shift in market structure is going to favor vertically integrated players who can optimize across the value chain - bullish for Mag7 - and specialists who sit at a leverage point. Everyone else? Gonna take some time to figure out, but we BELIEVE.

Nvidia isn’t going anywhere. Nvidia owns the critical hardware layer and has a very durable moat in the form of CUDA, Mellanox, and chip architecture.

OpenAI wowed everyone and brought tech to the masses but kind of messed up everything else, particularly pricing and distribution. Perplexity is smaller and more inventive than OpenAI, but it’s not clear where it’s going (sidebar: I’m an avid Perplexity user).

Microsoft will be Microsoft. They’re going to just throw money at things, and maybe their moat will persist, maybe it will erode. Intel was the big behemoth until it wasn’t.

Meta is open-sourcing its Llama model and owns distribution with 50%+ of the people on planet Earth using a Facebook product on a daily basis. Meta announced they plan to spend $65B in Capex on AI in 2025, and we think it makes sense for Meta to continue to build their own models. Meta has a huge opportunity to sell AI applications to consumers, businesses, and enterprises given its existing revenue streams and convert that Capex into top line revenue growth.

Apple hasn’t announced any big data center plans; they’re focused on smaller models running locally on devices. The launch of AI within iOS was wonky at best (I haven’t found it useful, has anyone?) but given Apple has their own vertically integrated operation spanning chips, hardware, software, and app ecosystem, it makes sense for Apple to do what they do best - innovate on form factor rather than play the same game as everyone else.

There are a lot of small cap names in the semiconductor, data center, and energy value chain that we follow closely, and we’re actively tracking and talking to these firms. We get some of our best insights from understanding what’s happening at larger firms, where budgets are being allocated, what executive teams are concerned about, and how they’re prioritizing where to spend time. We’ll be sharing more on our equities research in Q2 and in our memos going forward.

Putting it All Together

We are still in the early innings of the emerging data center economy and AI narrative. Shifts are to be expected. The story is evolving, but we don’t think DeepSeek has changed the game as much as internet discourse would have one believe. Our job as investors is to find enduring, not ephemeral, opportunities and founders who understand the game and can ride the wave of change.

The buildout we’ve seen so far in AI is like trying to build railroad tracks for horses - we know trains are coming, we think they’re trains but we’re not 100% sure, so we’re building to the best of our abilities. We know there will be a surge in AI adoption in all industries - the demand is there, the infrastructure needs to be built, and plans are measured in years (not days or weeks or hours, like Twitter’s attention span). These shifts create opportunities for us and the founders we’re backing, because they prompt a re-evaluation of priorities across a wide range of organizations that control the flow of capital.

In short - Believe in something. Believe in AI. Believe in thermodynamics. Believe that every part of our exponentially expanding digital world will require electrons flowing through a chip somewhere. Believe in Daddy Jensen. Believe we’re going to be a Kardashev 2 society. Believe we’re going to Mars. Believe in animal spirits. Believe in something. It is the only way forward.

Always believe in Daddy Jensen!
