Give Me Power, Liquid Cooling, and Transformers: Crucible Capital Datacenter Connect Recap

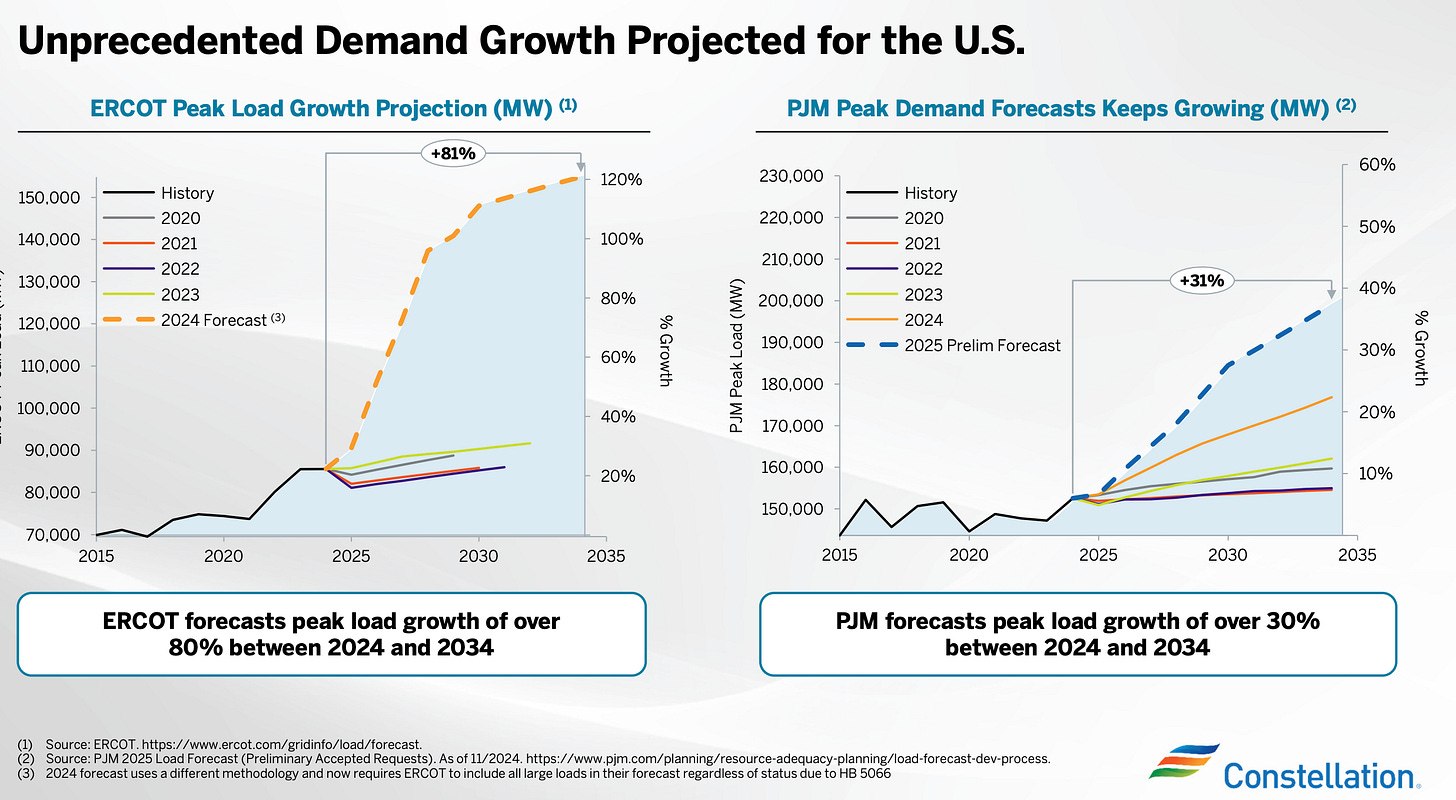

You want 600kW? You better pray to PJM.

The Crucible team spent two days at Data Center Dynamics’ flagship NYC conference, DCD Connect. We met with dozens of datacenter operators, infrastructure providers, builders, and datacenter infrastructure management (DCIM) developers. Our portfolio company, CentralAxis, presented its DCIM solution at the conference, and we co-hosted a cocktail event at our NYC HQ to kick off the week, welcoming datacenter operators (DCOs), DCIM providers, lobbyists, material suppliers, and investors.

The conference was overflowing, and the panels were direct and engaging, with a clear focus on the six themes outlined below. Dramatically increasing rack power consumption, coupled with a gaping power deficit in the U.S., is pushing operators, infrastructure builders, and power producers to meet demand and operate more efficiently, creating many opportunities for investment. Our takeaways:

The AI-Driven Step Change in Power and Cooling Demands

Rack densities are rising fast. Nvidia’s recent announcement of a 600kW rack design underscores how dramatically power demands have grown, far beyond what legacy systems can support. Average chip power draw surged from 300 watts in 2016 to 800 watts just five years later, and AI-driven growth is making the cooling challenge even more urgent. Direct-to-chip liquid cooling and immersion cooling are gaining momentum, but they face significant adoption and deployment hurdles. For instance, Microsoft’s test deployment of immersion cooling on Azure workloads ran into issues, highlighting the need for further refinement. The Department of Energy is also subsidizing innovation in this space through ARPA-E’s Cooler Chips initiative, a $14M program targeting a 10x improvement in heat rejection technologies via four distinct approaches, all of which are expected to be viable.
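To make the cooling gap concrete, here is a back-of-the-envelope sketch using the steady-state energy balance Q = ṁ·c_p·ΔT. The coolant properties are approximate textbook values, and the 10 K water temperature rise and 15 K air temperature rise are our own illustrative assumptions, not figures from any vendor or panelist.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Coolant:
    name: str
    cp_j_per_kg_k: float      # specific heat capacity, J/(kg*K)
    density_kg_per_m3: float  # density, kg/m^3


# Approximate textbook properties (assumptions); real values vary with
# temperature, pressure, and glycol concentration in liquid loops.
WATER = Coolant("water", 4186.0, 997.0)  # ~25 C
AIR = Coolant("air", 1005.0, 1.2)        # ~20 C, sea level


def volumetric_flow_m3_s(heat_load_w: float, delta_t_k: float, coolant: Coolant) -> float:
    """Volumetric flow needed to carry heat_load_w away with a delta_t_k rise.

    Steady-state energy balance: Q = m_dot * cp * dT, so m_dot = Q / (cp * dT).
    """
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    mass_flow_kg_s = heat_load_w / (coolant.cp_j_per_kg_k * delta_t_k)
    return mass_flow_kg_s / coolant.density_kg_per_m3


if __name__ == "__main__":
    rack_w = 600_000  # the 600kW rack figure from the text
    water = volumetric_flow_m3_s(rack_w, 10.0, WATER)  # assumed 10 K loop rise
    air = volumetric_flow_m3_s(rack_w, 15.0, AIR)      # assumed 15 K air rise
    print(f"water: {water * 60_000:.0f} L/min")         # m^3/s -> L/min
    print(f"air:   {air:.1f} m^3/s ({air / water:.0f}x the water volume)")
```

Under these assumptions, a 600kW rack needs on the order of 860 liters of water per minute, versus roughly 33 cubic meters of air per second, a volume difference of more than 2,000x. That gap is why air alone stops being practical at these densities.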

According to a liquid cooling infrastructure provider we spoke with, a flood of startups is entering the space, attracted by the market opportunity. Smaller public companies are also actively making acquisitions to catch up with large-cap leaders such as Vertiv. It’s an arms race.

Energy Infrastructure Bottlenecks and Workarounds

Energy infrastructure is one of the biggest constraints on datacenter expansion today. Multi-year lead times for critical equipment like transformers and switchgear, compounded by interconnection delays and regional capacity limits, are slowing deployments across the board. A major independent power producer (IPP) at the event remarked that while everyone is trying to work around the transformer bottleneck, there’s no clear path forward yet.

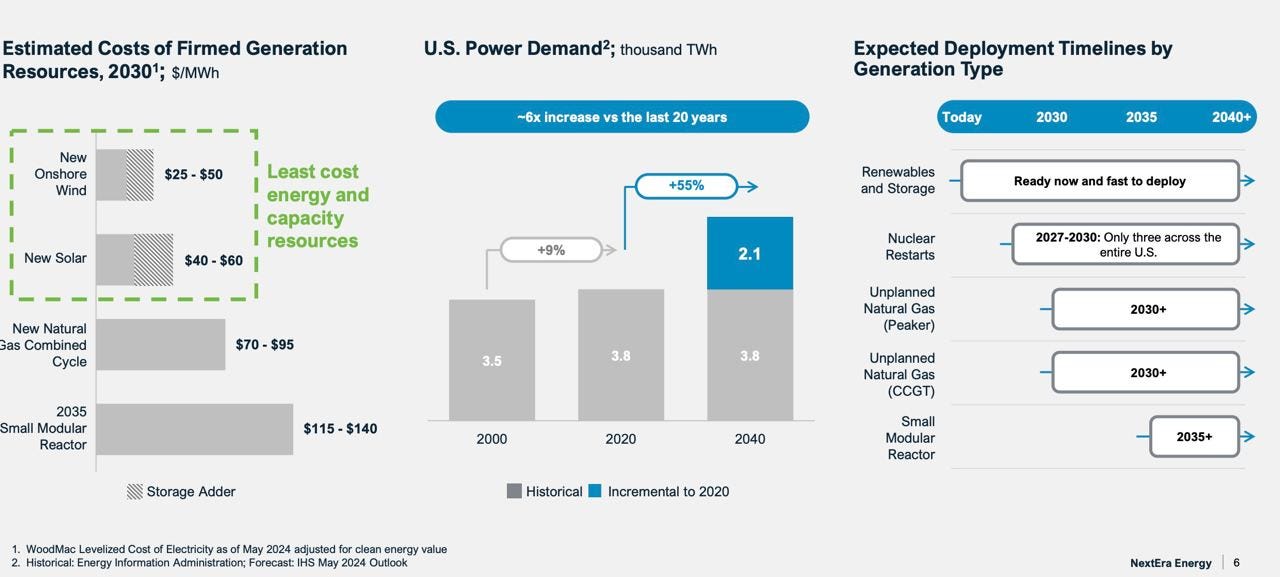

Operators are pursuing a range of creative solutions to navigate these limitations. Behind-the-meter gas is the go-to interim fix, though more regulatory clarity is needed, especially in ERCOT and PJM, where we’re monitoring the rulemaking processes as they unfold. Other ideas floated include islanded SMRs (still years away), restarting retired nuclear plants (e.g., Microsoft x Constellation x Three Mile Island), and extending the lifespans of existing nuclear plants (a Constellation focus). Each option has its tradeoffs, but the common thread is urgency: power procurement and delivery need to move faster to keep up with demand.

The DCIM Software Landscape is Evolving Rapidly

DCIM is at a tipping point. As AI workloads grow more complex and power-hungry, existing infrastructure management tools are struggling to keep up. Operators face serious challenges with interoperability, data quality, and responsiveness to dynamic workload demands. Most operators use only a single DCIM product, because integrating multiple systems into a cohesive platform has proven too difficult. Many pair off-the-shelf tools with in-house ones, but concerns about data quality persist, especially when sharing data with customers.

DCIM systems were originally built for a different era, one focused on asset management and computational fluid dynamics (CFD) modeling. Now they must evolve to handle high-density racks and provide real-time feedback loops, predictive analytics, and smarter automation. With rack designs heading toward 600kW, operators face an entirely new operational paradigm. Alarm fatigue and time to resolution are top of mind. Predictive maintenance and analytics capabilities, like failure mode and effects analysis (FMEA), are becoming critical tools. The best returns on investment come not from surface-level reporting, but from solving underlying systemic inefficiencies.
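As a rough illustration of how FMEA-style scoring can cut down alarm noise, the sketch below ranks failure modes by the classic risk priority number (RPN = severity × occurrence × detection) and surfaces only those above a threshold. The component names, scores, threshold, and the `prioritize` helper are all hypothetical; this is not how any particular DCIM product implements FMEA.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailureMode:
    component: str
    mode: str
    severity: int    # 1-10: impact if the failure occurs
    occurrence: int  # 1-10: likelihood of the failure occurring
    detection: int   # 1-10: higher means HARDER to detect before impact

    def __post_init__(self) -> None:
        # Reject scores outside the conventional 1-10 FMEA scale.
        for name in ("severity", "occurrence", "detection"):
            value = getattr(self, name)
            if not 1 <= value <= 10:
                raise ValueError(f"{name} must be in 1..10, got {value}")

    @property
    def rpn(self) -> int:
        """Classic FMEA risk priority number: S x O x D (range 1..1000)."""
        return self.severity * self.occurrence * self.detection


def prioritize(modes: list[FailureMode], threshold: int) -> list[FailureMode]:
    """Hypothetical helper: modes at or above the RPN threshold, highest first."""
    flagged = [m for m in modes if m.rpn >= threshold]
    return sorted(flagged, key=lambda m: m.rpn, reverse=True)


if __name__ == "__main__":
    # Hypothetical scores for illustration only.
    modes = [
        FailureMode("CDU", "pump failure", 9, 3, 4),
        FailureMode("rack PDU", "breaker trip", 7, 4, 2),
        FailureMode("manifold", "quick-disconnect leak", 8, 2, 7),
        FailureMode("CRAH", "fan bearing wear", 4, 5, 3),
    ]
    for m in prioritize(modes, threshold=100):
        print(f"{m.rpn:>4}  {m.component}: {m.mode}")
```

Here only the leak (RPN 112) and the CDU pump failure (RPN 108) clear the threshold. Because the detection score is inverted, a failure that is hard to see coming gets weighted up even when it is rare.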

Supply Chains are Becoming More Resilient and Localized

Tariffs, rising materials costs, and ongoing geopolitical instability are pushing operators and developers to localize supply chains and onshore key manufacturing processes. While lead times are improving for certain components like UPS systems, they remain long (on the order of years) for switchgear and transformers, the most critical bottlenecks in deployment timelines. To navigate uncertainty, operators are leaning into pay-as-you-go procurement models and just-in-time (JIT) strategies, especially as AI workloads evolve so rapidly that buying 2–3 years in advance is increasingly impractical.

General contractors are also feeling the squeeze — no one wants to go back to their customers with a higher-than-quoted build-out price, so in many cases, tariff-related costs have already been baked into current pricing models. The U.S. market is also becoming more regionalized; whereas supply chains were much longer in 2018–2019, today’s emphasis is on localized sourcing and faster delivery. Vertically integrated players are gaining an edge, and flexible financing structures are becoming a competitive advantage. Ultimately, success in this environment will depend on strong supplier partnerships and improved visibility across the value chain.

Specialization and Modularity of Infrastructure

Infrastructure is becoming more modular and specialized to meet the unique needs of modern workloads. GPU farms and micro-modular reactors are two examples of how tailored design is becoming the norm. Design cycles are shortening, and with that comes a growing preference for pay-as-you-go procurement and JIT models, allowing builders to stay nimble in the face of fast-paced innovation and shifting hardware requirements.

This modularity also supports flexible scaling and site-specific optimization. As operators adapt to higher power densities and non-traditional cooling needs, the infrastructure itself is being reimagined, from the building footprint to the underlying energy and thermal systems. In this environment, agility is everything. One example of this trend is the Fire-Flyer AI-HPC architecture, which shows how hardware-software co-design can reduce costs and energy consumption at scale. Deploying 10,000 PCIe A100 GPUs, Fire-Flyer achieved DGX-A100-level performance at half the cost and with 40% less energy.

Power Procurement is Getting More Creative

Hyperscalers are feeling the heat, quite literally. They need new sites up and running within two years, not four or five. As a result, many are turning to natural gas as a stopgap and deprioritizing decarbonization goals in the near term. Interconnection delays remain a major headache, making behind-the-meter solutions an attractive, albeit complex, alternative. Again, clarity from ERCOT and PJM on rule-setting will be critical. Locating near generation in front of the meter is also viable, especially in areas with nuclear capacity. Extending the life of existing nuclear plants is particularly promising: as Constellation Energy noted, many plants are up for license renewal and could sign long-term contracts.

That said, small modular reactors (SMRs) are still in a murky place; one hyperscaler described the data supporting their deployment as “wishy-washy.” Utilities are also grappling with demand forecasting. One large utility admitted it’s tough to parse how much of the current demand pipeline is real, but noted that it remains unconcerned, since it is also planning for growth in onshore manufacturing and electrification. A client-facing hyperscaler confirmed its demand is genuine, driven by end-user needs.

Finally, as Constellation pointed out, curtailment needs to be taken more seriously. A Duke University study found that shaving just 2.5% of peak load could add 75 GW to the grid. PJM data showed that demand reaches 65% of capacity only 20% of the time, and 90% of capacity just 2% of the time. Reducing those peaks could unlock enormous capacity. EPRI’s DCFlex initiative is working on exactly that: making datacenters more flexible in how and when they draw power.
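The PJM and Duke figures rest on the same idea: if a new load agrees to curtail during the rare hours near the system peak, far more of it fits on the existing grid. The sketch below is our own simplified illustration of that idea, not the Duke study’s methodology. It uses a hypothetical 100-hour load series (not PJM data) and assumes the new load is flat and must be curtailed whenever it would push total demand above capacity; all function names are hypothetical.

```python
from typing import Sequence


def share_of_hours_above(load_mw: Sequence[float], fraction_of_peak: float) -> float:
    """Fraction of intervals in which load exceeds fraction_of_peak * peak load."""
    threshold = fraction_of_peak * max(load_mw)
    return sum(1 for x in load_mw if x > threshold) / len(load_mw)


def curtailment_rate(load_mw: Sequence[float], capacity_mw: float, new_load_mw: float) -> float:
    """Share of a new flat load's energy that must be curtailed so that
    existing load + new load never exceeds capacity_mw in any interval."""
    if new_load_mw <= 0:
        return 0.0
    curtailed = sum(max(0.0, x + new_load_mw - capacity_mw) for x in load_mw)
    return curtailed / (new_load_mw * len(load_mw))


def max_flexible_load(load_mw: Sequence[float], capacity_mw: float,
                      max_rate: float, step_mw: float = 1.0) -> float:
    """Largest new load (in step_mw increments) whose curtailment rate <= max_rate."""
    if not 0.0 <= max_rate < 1.0:
        raise ValueError("max_rate must be in [0, 1)")  # rate tends to 1 as load grows
    added = 0.0
    while curtailment_rate(load_mw, capacity_mw, added + step_mw) <= max_rate:
        added += step_mw
    return added


if __name__ == "__main__":
    # Hypothetical 100-hour system load (MW) with rare peaks; NOT PJM data.
    load = [60.0] * 80 + [70.0] * 15 + [85.0] * 3 + [100.0] * 2
    capacity = 100.0
    print("hours above 65% of peak:", share_of_hours_above(load, 0.65))
    print("hours above 90% of peak:", share_of_hours_above(load, 0.90))
    for rate in (0.0, 0.02, 0.05):
        print(f"curtailment {rate:.0%}: {max_flexible_load(load, capacity, rate)} MW")
```

In this toy series, load exceeds 65% of peak in 20% of hours and 90% of peak in 2% of hours. Because the peak already equals capacity, no new load fits without curtailment; accepting a 2% curtailment rate admits 15 MW, and 5% admits 33 MW.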

Economics are coming for ecosystem moats in chip land, and this is NOT yet priced in.

Both datacenter operators we hosted at our cocktail hour are deploying AMD’s MI300X with the open-source SGLang inference software, and report outperforming Nvidia’s H200 on inference workloads: 5x the throughput at 60% of the cost, with more memory. Combined with efforts to build better drivers for AMD, we see potential headwinds for Nvidia’s dominance, creating opportunities for new value chains. Expect Nvidia to go hard on acquisitions and vertical integration to reinforce the stickiness of its moat.
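Operator-reported ratios like these are easiest to compare when normalized to cost per unit of throughput. The sketch below takes the 5x throughput and 60% cost figures at face value; both are workload-specific claims from our conversations, not benchmarks we ran, and the function name is our own.

```python
def relative_cost_per_throughput(cost_ratio: float, throughput_ratio: float) -> float:
    """Cost per unit of throughput of option A relative to option B.

    cost_ratio: A's cost / B's cost (0.6 means "60% of the cost").
    throughput_ratio: A's throughput / B's throughput (5.0 means "5x").
    """
    if cost_ratio <= 0 or throughput_ratio <= 0:
        raise ValueError("ratios must be positive")
    return cost_ratio / throughput_ratio


if __name__ == "__main__":
    # Reported figures from the text, taken at face value: MI300X vs H200.
    reported = relative_cost_per_throughput(0.6, 5.0)
    print(f"reported: {reported:.2f}x the cost per unit of throughput")
    # Sensitivity: what if the throughput multiple is smaller in practice?
    for multiple in (1.0, 2.0, 5.0):
        ratio = relative_cost_per_throughput(0.6, multiple)
        print(f"{multiple:.0f}x throughput -> {ratio:.2f}x cost per unit")
```

At the reported figures, cost per unit of inference throughput would be about 12% of the H200 baseline. The sensitivity loop shows how quickly that advantage shrinks if the throughput multiple does not hold: at 1x, the only saving is the 40% lower cost.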

In conclusion… the energy-compute-industrial stack is undergoing a massive realignment — and at DCD Connect NYC, that shift was palpable. AI has broken the old models, and the datacenter industry is scrambling to catch up. Power procurement, cooling, hardware design, and DCIM software are all being re-architected in real time. We left the conference energized by the pace of innovation and validated in our conviction that this stack’s transformation will generate outsized opportunities for those willing to build (and back) the hard stuff. Crucible is here for it — and we’re just getting started. Let’s rip.

Last but not least, here are some charts we love from sell-side research to tie everything together: